This is a cumulative posting, started in March 2018, that covers the realities of “AI”. I will be sharing observations and various snippets that illustrate that the latest advancements in neural networks and machine learning do not constitute “intelligence”. A much better definition for the acronym AI is “Augmented Intelligence”.

And always remember the famous quote: “Artificial intelligence is no match for natural stupidity.”

Update

While most pundits highlight the nefarious nature of “AI” with images of people being physically harmed in a way that is reminiscent of the movie Terminator, the more realistic (and near-term) harm is the centralized control of information and the associated projection of “reality”.

It is essential to take a step back and recognize the power of information in everyone’s life, and the fact that the information we consume establishes our notion of reality. I like to point out to my family that the only direct form of sensing reality is with your five senses: sight, hearing, touch, taste, and smell. That is, I can walk outside my house and see if it’s sunny or cloudy, determine the approximate temperature, and observe any activity on my residential street. Other than that, my reality for the day is formed by my computer, cell phone, and/or television.

I have a related and long-running posting, started in 2018, entitled “Do US Tech Companies Have Too Much Influence?” This is about Microsoft, Apple, Google, Facebook, Amazon, etc. (the same folks pushing AI as the master solution for society), where the success of their business is inherently based on the idea that you give up your privacy and control for the promise of a “better life”. Their business models are inherently supportive of centralized control of information and a strong administrative state.

Since the most common and practical use of AI is as a more efficient search tool, the source of information is falling into much less diversity and more centralized control. That is, the LLMs adopted by the large tech companies are now the “Oracles of Delphi” in providing answers to questions (including all the biases of the LLM maintainers).

A recent editorial from Gary Marcus, “AI bot swarms threaten to undermine democracy”, identifies some of the very likely manipulations that we are already experiencing:

Automated bots that purvey disinformation have been a problem since the early days of social media, and bad actors have been quick to jump on LLMs as a way of automating the generation of disinformation. But as we outline in the new article in Science we foresee something worse: swarms of AI bots acting together in concert…

No democracy can guarantee perfect truth, but democratic deliberation depends on something more fragile: the independence of voices. The “wisdom of crowds” works only if the crowd is made of distinct individuals…

Because humans update their views partly based on social evidence—looking to peers to see what is “normal”—fabricated swarms can make fringe views look like majority opinions. If swarms flood the web with duplicative, crawler-targeted content, they can execute “LLM grooming,”

December 27, 2025

We are now starting to see the consumer pushback against the unfulfilled promises of AI. There are masses of people fed up with Microsoft Copilot interfering in all the functions in the Microsoft Office world. The latest instance of anguish comes from the users of the open source Firefox browser. The money quote expressing disdain for AI features being forced on the users:

“I’ve never seen a company so astoundingly out of touch with the people who want to use its software.”

October 30, 2025

Having lived through the “Dot-Com era” almost 25 years ago, with its great expectations of internet technology and crazy company valuations, I tend to take a jaundiced view of the current promises of AI and their effect on the economy. While it is not exactly the same, the “AI era” has many similarities (“history may not repeat itself but it certainly rhymes”). Scott Galloway highlights some of the potential for great downsides for the US economy from its dependence on the promise of AI:

If the Chinese can take down the valuations of the top 10 AI companies — if those 10 companies fall 50, 70, even 90% like they did in the dot-com era — that would put the U.S. in a recession and put Trump out of vogue.

How can they do that? Pretty easily. I think they’re going to flood the market with cheap, open-weight models that require less processing power but are 90% as good. The Chinese AI sector, acting under the direction and encouragement of the CCP, is about to Old Navy the U.S. economy: They’re going to mess with America’s big bet on AI and make it not pay off.

October 22, 2025

Here is a news headline that should be of interest to those following AI:

Meta lays off 600 employees within AI unit

Meta will lay off roughly 600 employees within its artificial intelligence unit as the company looks to reduce layers and operate more nimbly, a spokesperson confirmed to CNBC on Wednesday.

October 18, 2025

Well, here you go… From the famous “community” web site Reddit, some comments from a moderator about the negative performance of AI-related chatbots:

It was recently brought to our attention that “Reddit Answers” – reddit’s new attempt at an AI assisted search tool – has been spreading grossly dangerous misinformation to the posters and commenters of this sub. This is a new feature in reddit in the past year. This AI is automatically presenting content to r/familymedicine users who post/comment – typically in something related to what was posted. Moderators cannot disable this feature in the sub.

October 17, 2025

The real change in the “AI industry” is the bifurcation in expectations and accountability. On one hand, the AI promoters have accepted that LLMs are easily manipulated and often hallucinate their answers. The result is that these AI companies, such as Google, are now stamping warning labels on the results, something like, “AI is not perfect. You need to check the results”.

At the same time, end users are starting to expect near perfection when using the AI tools and chatbots. For example, when casual users were asked “Should companies be held legally liable for the advice that their AI chatbots give if following the advice results in harmful outcomes?” it was almost 80% across the board “probably/definitely should”.

I would say these are some conflicting perspectives…

October 6, 2025

Interesting comments about the major investments in so-called AI by the CEO of OpenAI:

Altman commands a half-trillion-dollar startup that’s the tip of the spear for an out-of-control AI gold rush. Pretty much the entire world economy is tangled up in the hundreds of billions of investment being poured into the industry.

Illustrating just how all-devouring AI is, here’s this worrying statistic provided by the Wall Street Journal: in the US, capital expenditures for AI contributed more to growth in the economy in the past two quarters than all of consumer spending…

There must be a pony in there somewhere.

September 8, 2025

We are going to start seeing all sorts of videos and explanations about the limitations of these various LLMs.

August 11, 2025

There are quite a few techno-pundits who are very strong naysayers when it comes to AI, and specifically large language models (LLMs) such as Grok and ChatGPT. One of the most succinct comments I have found is: “People are wising up that LLMs are not in fact AGI-adjacent.”

We also have the financial community now expressing concerns that the reality of the AI-LLM generation is not all that it is promoted to be. This concern is accentuated by the fact that trillions of dollars have been invested in the promise of AI-LLM. Some folks are comparing the over-valuation of the many AI businesses to the dot-bomb era of 2001.

I frankly limit my use of these various “AI” tools to tasks benefiting from efficient search methods. Since Perplexity does a nice job of providing transparency on its sources of information, it is my tool of choice (an agent, co-pilot, or whatever you want to call it). It definitely reduces my time searching for information, and provides an audit trail and references, which I can easily pursue for a deeper dive.

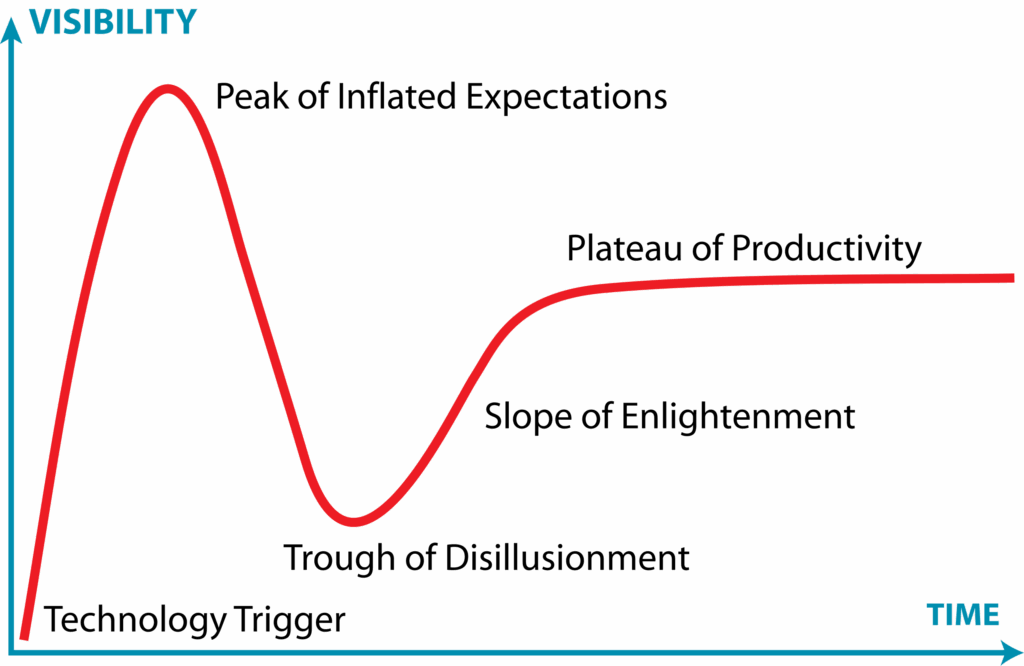

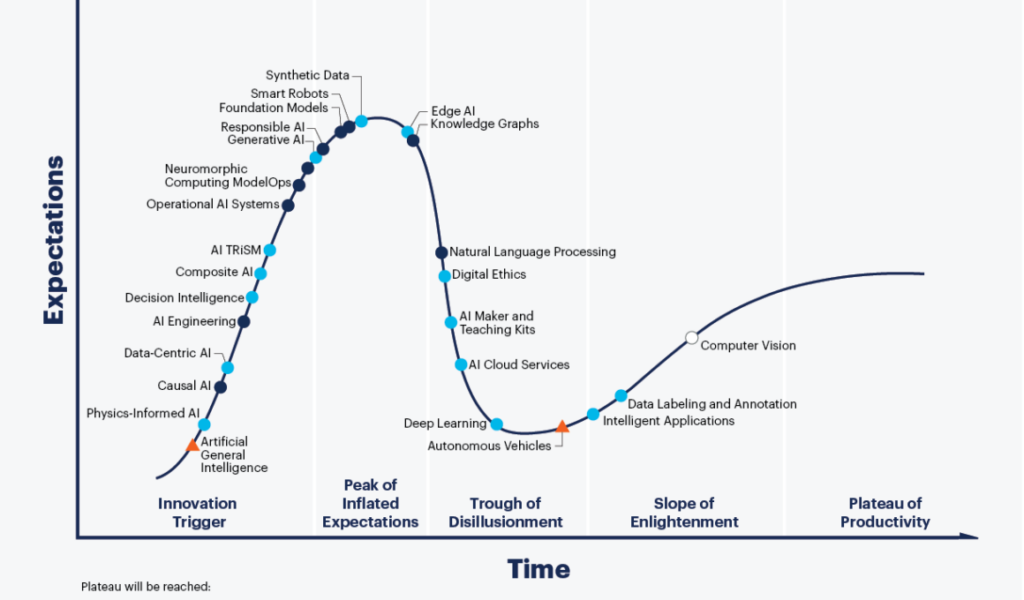

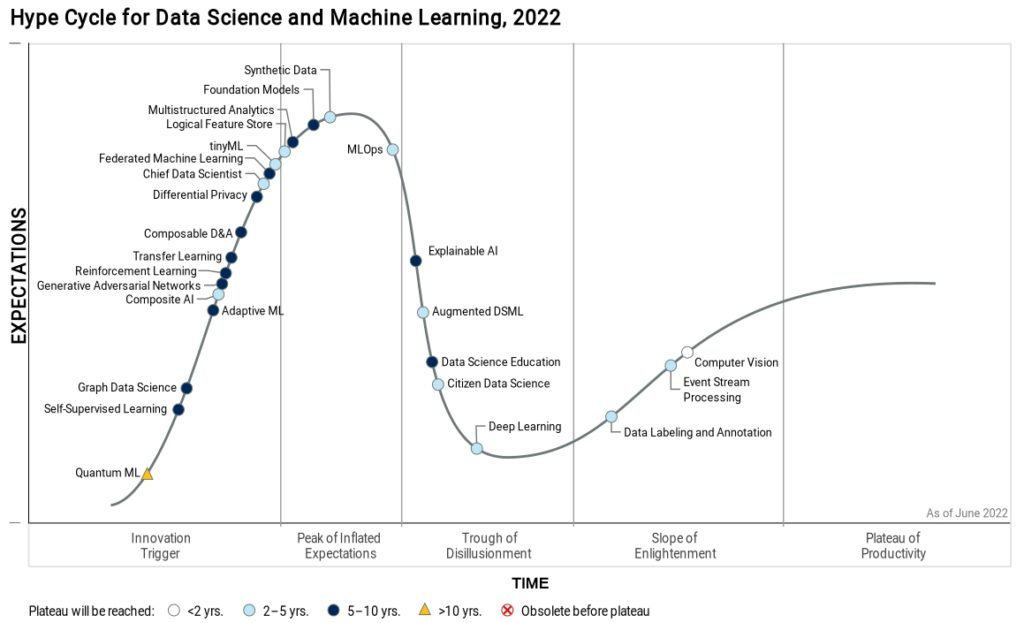

This requires a refresher course on the Gartner technology enthusiasm curve (see below) where AI-LLM is on a course heading for the “peak of inflated expectations”, soon to be followed by the “trough of disillusionment”. We will ultimately hit a useful steady state at the “plateau of productivity” where everyone concludes AI-LLM is not the promised miracle but it provides useful benefits for a defined set of use cases.

May 15, 2025

As indicated numerous times in this cumulative blog posting, the current incarnation of AI has useful capabilities, but it does not enable “intelligence”. Here is a good recent summary from a company executive involved in building AI products:

“The technology we’re building today is not sufficient to get there,” said Nick Frosst, a founder of the AI startup Cohere who previously worked as a researcher at Google and studied under the most revered AI researcher of the last 50 years. “What we are building now are things that take in words and predict the next most likely word, or they take in pixels and predict the next most likely pixel. That’s very different from what you and I do.” In a recent survey of the Association for the Advancement of Artificial Intelligence, a 40-year-old academic society that includes some of the most respected researchers in the field, more than three-quarters of respondents said the methods used to build today’s technology were unlikely to lead to AGI.

November 14, 2024

The latest comments from Gary Marcus:

Sky high valuation of companies like OpenAI and Microsoft are largely based on the notion that LLMs will, with continued scaling, become artificial general intelligence. As I have always warned, that’s just a fantasy. There is no principled solution to hallucinations in systems that traffic only in the statistics of language without explicit representation of facts and explicit tools to reason over those facts.

Here is another related viewpoint expressed by a pragmatic commenter:

No matter how fast you travel east or west, you’ll never reach the north pole. AI as currently understood runs afoul of Gödel’s incompleteness theorem. You simply cannot achieve machine consciousness through neural nets alone.

The human brain doesn’t use only neurons to process data. There is a second, completely different calculation and communication system in the glial cells, which can be influenced by and themselves influence the operation of neurons, through diffuse chemical communication rather than localized electrical activity. We’re not accounting for glial cells at all.

Instead we’ve been throwing more and more computing power at neurons and expecting Gödel to just not matter.

I recall reading a book on AI some 30 years ago where the author was comparing the performance of digital computing versus the human brain for some common analytical tasks. The author’s method of comparison was based on the amount of energy (measured in Joules) required by the brain versus a computer. It wasn’t even close. The biological brain required a fraction of what the computer required.

At the time, I didn’t think much about that comparison… Let’s fast forward to 2024 and review all the discussions about needing small nuclear reactors to provide the energy for these GPUs… It’s now very relevant.

October 28, 2024

Here is another technology soothsayer weighing in on the prospects of the current incarnation of AI: Linux creator Linus Torvalds proclaimed that the current AI industry is 90% marketing and 10% reality. Torvalds said he would basically ignore AI until the hype subsides, predicting meaningful applications would emerge in five years.

“I think AI is really interesting, and I think it is going to change the world. And, at the same time, I hate the hype cycle so much that I really don’t want to go there.”

October 8, 2024

A recent article in CIO magazine/web site talks about “12 Famous AI Disasters”. Here are a few snippets:

while actions driven by ML algorithms can give organizations a competitive advantage, mistakes can be costly in terms of reputation, revenue, or even lives…

After working with IBM for three years to leverage AI to take drive-thru orders, McDonald’s called the whole thing off in June 2024. The reason? A slew of social media videos showing confused and frustrated customers trying to get the AI to understand their orders.

…

In February 2024, Air Canada was ordered to pay damages to a passenger after its virtual assistant gave him incorrect information at a particularly difficult time.

…

In 2014, Amazon started working on AI-powered recruiting software to do just that. There was only one problem: The system vastly preferred male candidates. In 2018, Reuters broke the news that Amazon had scrapped the project.

September 28, 2024

In the February 2023 addition to this posting, I included the graph for the “technology hype cycle” — it starts with great expectations, followed by disillusionment, and finally settles in on pragmatic use.

Now that LLMs are entering the Trough of Disillusionment (see chart above), many pundits are coming forward and explaining why there are unrealistic expectations for ChatGPT, Gemini, Copilot, etc.:

LLMs aren’t even remotely designed to reason. Instead, models are trained on vast amounts of human writing to detect, then predict or extend, complex patterns in language. When a user inputs a text prompt, the response is simply the algorithm’s projection of how the pattern will most likely continue… LLMs are designed to produce text that looks truth-apt without any actual concern for truth.
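To make the "predict how the pattern will most likely continue" idea concrete, here is a toy sketch in Python. It is not how a production LLM works (those use neural networks trained on vastly more text); it is a simple word-pair counter, with a made-up corpus and hypothetical function names, that shows how fluent-looking output can come from pattern statistics alone, with no notion of truth.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def continue_text(model, start, length=5):
    """Extend `start` by repeatedly appending the most likely next word."""
    output = [start]
    for _ in range(length):
        next_word = predict_next(model, output[-1])
        if next_word is None:
            break  # outside the training data: the model has nothing to say
        output.append(next_word)
    return " ".join(output)


# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))       # cat
print(predict_next(model, "moon"))      # None
print(continue_text(model, "dog", 3))   # dog sat on the
```

The toy model answers "cat" after "the" only because that pair was the most common in its training text, and it has no answer at all for a word it never saw.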

August 1, 2024

Since I am actively involved in using deep learning tools to help solve complex computing problems, I certainly see some benefits when they are deployed as another tool in the tool box — instead of some magical AI panacea. In fact, I rarely start a discussion about our work by describing our efforts as “AI”.

There are three relatively interchangeable terms that are used to describe the major challenge to AI (or any IT or digital system) that provides some level of automation. The challenge is those things that happen relatively infrequently and haven’t been anticipated by the designer of the automation system. These terms are:

- Edge cases

- Outliers

- Long tail

It’s a way of describing the application situation (e.g., self-driving cars) as having many scenarios with subtle variations (“use cases”, if you want to use the IT jargon). The more edge cases, the more complex the system and the more likely the system will have failures.

Here is the key summary on this topic from Dr. Gary Marcus, which just happens to be the basis for his current cynicism with the latest incarnation of AI:

GenAI sucks at outliers. If things are far enough from the space of trained examples, the techniques of generative AI will fail.

The solution or work-around is to limit the edge cases for the application (e.g., self-driving cars that only operate on designated lanes on the interstate), or keep the human in the loop to handle those edge cases (e.g., driver has to remain in the driver seat to handle situations that are outside the training data).
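The human-in-the-loop work-around can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two-number feature tuples, the `max_distance` threshold, and the function names are all hypothetical, and a raw Euclidean distance to the nearest training example is a crude stand-in for whatever "outside the training data" test a real system would use.

```python
import math


def distance_to_training(sample, training_examples):
    """Euclidean distance from `sample` to its nearest training example."""
    return min(math.dist(sample, example) for example in training_examples)


def decide(sample, training_examples, automated_policy, max_distance):
    """Use the automated policy only when the input resembles training data."""
    if distance_to_training(sample, training_examples) > max_distance:
        # Edge case: too far from anything seen in training, so defer.
        return "HAND_OFF_TO_HUMAN"
    return automated_policy(sample)


# Hypothetical training data: (speed in mph, lane offset in meters).
training = [(60.0, 0.0), (65.0, 0.2), (55.0, -0.1)]


def keep_lane_policy(sample):
    """Placeholder for the automated system's normal behavior."""
    return "KEEP_LANE"


print(decide((62.0, 0.1), training, keep_lane_policy, max_distance=5.0))  # KEEP_LANE
print(decide((20.0, 3.0), training, keep_lane_policy, max_distance=5.0))  # HAND_OFF_TO_HUMAN
```

Tightening `max_distance` corresponds to limiting the application to a narrower set of situations, at the cost of handing more cases to the human.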

April 22, 2024

The CIO web site has an article entitled “10 Famous AI Disasters”, which nicely summarizes some of the blunders and failures of machine learning systems. These range from Air Canada being ordered to pay damages after its virtual assistant gave a passenger incorrect information, to Zillow writing down millions of dollars in housing investments when its machine learning algorithm failed at predicting home prices.

February 21, 2024

You may have heard or seen recently that many of these large language models, such as ChatGPT and Gemini, are exhibiting illogical behavior. For example, Gemini (from Google) refused to show photos of any Caucasian people, while ChatGPT provided answers with crazy gibberish: “grape-turn-tooth over a mind-ocean jello type”. Gary Marcus shares his usual pragmatic perspective:

In the end, Generative AI is a kind of alchemy. People collect the biggest pile of data they can, and (apparently, if rumors are to be believed) tinker with the kinds of hidden prompts that I discussed a few days ago, hoping that everything will work out right…

The reality, though, is that these systems have never been stable. Nobody has ever been able to engineer safety guarantees around them. We are still living in the age of machine learning alchemy…

As long as you keep a reasonable scope on these machine learning and natural language processing tools, they can be very helpful. When you start expecting these advanced information technology tools to replace the robust problem solving of a human with an IQ of 100, that’s when you start getting into trouble.

November 30, 2023

The president of Microsoft said there is no probability of super-intelligent artificial intelligence being created within the next 12 months.

“There’s absolutely no probability that you’re going to see this so-called AGI, where computers are more powerful than people, in the next 12 months… It’s going to take years, if not many decades, but I still think the time to focus on safety is now.”

November 24, 2023

There is an interesting article on efforts at AGI – Artificial General Intelligence:

Researchers at the University of Cambridge have developed a self-organizing, artificially intelligent brain-like system that emulates key aspects of the human brain’s functionality.

…

Remarkably, the artificially intelligent brain-like system began to exhibit flexible coding, where individual nodes adapted to encode multiple aspects of the maze task at different times. This dynamic trait mirrors the human brain’s versatile information-processing capability.

October 3, 2023

Here is commentary from a writer less convinced that AI is bringing the holy grail:

This will be an unpopular take: I believe AI is teetering on the edge of a disillusionment cliff... I know what you’re thinking. How could that be? After all, AI is booming. The past couple of weeks in AI news have been two of the most exciting, exhilarating and jam-packed…

But hear me out: Along with the fast pace of compelling, even jaw-dropping AI developments, AI also faces a laundry list of complex challenges… And after decades of experience with technology developments, from email and the internet to social media — and the hype and fallout that has accompanied each one — making that happen might not be the societal slam-dunk that Silicon Valley thinks it is.

August 26, 2023

The AI industry has coined the dubious term “Generative AI” to describe the verbal style of output returned from running these large language models (LLMs), such as GPT, PaLM, LLaMa, etc. While this capability can certainly change the internet search methods to which we have become accustomed, it likely has limited impact on many of the promises promoted by the folks currently involved with AI.

Rather than looking at AI as offering some mysterious brain power, it is best to view many of the methods of AI (machine learning, natural language processing, etc.) as advanced information technology capabilities. That is, there are some very useful applications of these technologies, but it’s not of the magnitude of “artificial general intelligence” or AGI.

As Gary Marcus noted in a recent ACM article:

…if the premise that generative AI is going to be bigger than fire and electricity turns out to be mistaken, or at least doesn’t bear out in the next decade… the whole generative AI field, at least at current valuations, could come to a fairly swift end.

Can all this overpromising result in a third “AI winter” (see April 16, 2020 posting below)?

May 2, 2023

It’s interesting that Elon Musk is the most cynical about the dangers from the adoption of AI. Based on some of the disappointing and deadly results from the promises of Tesla auto-drive, he knows first-hand that promoting computer-based technology as a panacea is very dangerous. Evidently, since 2019 there have been 17 deaths from Tesla Autopilot mode. Bear in mind that these deaths were not the result of some malevolent AI technology electing to kill humans. Instead, it was a result of expecting too much from AI where the “intelligence” was insufficient to support a human in a life threatening situation.

My takeaway is very simple: just like you can’t yet rely on so-called AI to automatically (and safely) drive your car for you, we shouldn’t yet depend on it carrying out complex tasks without human reasoning in the decision loop. This is especially true for tasks that have a “long tail distribution”, i.e., what some people refer to as having many “edge cases” that require advanced reasoning skills. Driving an automobile on any type of road surface has many complex situations that can arise, which depend upon more advanced inferencing and reasoning to properly handle.

April 19, 2023

I don’t yet see AI efforts such as ChatGPT causing the end of the world quite like Mr. Musk prognosticates. However, I do see these large language models having an impact on business and social interactions. This will manifest itself in ways that are similar to the impact that pervasive digital email and browsers had on our lives. That is, these advances in messaging and image display technology, circa the 1990s, initially drove the transaction cost of advertising and information sharing to near zero. This had a tremendous impact on selected business models (e.g., traditional paper-based media and advertising) and changed the way people interacted and socialized.

The problem with ChatGPT is rooted in two particular areas:

1) ChatGPT is not artificial intelligence. I view these large language models as a form of Dustin Hoffman in the movie Rain Man — a savant for recalling everything captured in the great “information cloud”, but mentally deficient and with no ability to differentiate fact from fiction. As Gary Marcus and Ezra Klein noted, “What does it mean to drive the cost of bullshit to zero”?

2) ChatGPT will be manipulated for nefarious purposes. The development teams have apparently incorporated political biases into the recall mechanisms, and numerous users have discovered that ChatGPT has a penchant for synthesizing information that doesn’t really exist. We already have a concentration of information power in the Big Tech companies. What is going to happen when the masses of people consider the bullshit machine as the “Oracle of Delphi” for all information?

April 15, 2023

People are assuming that ChatGPT performing well on common exams (ACT, Bar, etc.) is tantamount to possessing great intelligence and reasoning skills. In reality, the large language models that power ChatGPT are based on the superlative capabilities of computers to store and retrieve large amounts of information:

Memorization is a spectrum. Even if a language model hasn’t seen an exact problem on a training set, it has inevitably seen examples that are pretty close, simply because of the size of the training corpus. That means it can get away with a much shallower level of reasoning. So the benchmark results don’t give us evidence that language models are acquiring the kind of in-depth reasoning skills that human test-takers need — skills that they then apply in the real world.

February 24, 2023

I don’t recall where I came across the chart below, but it does a nice job explaining where the various “data science” and “AI” technologies stand with regard to the changing expectations.

The chart illustrates how new technologies are introduced with great expectations of “solving world hunger and peace”; which is naturally followed by the disillusionment of the technology not being a “silver bullet”; and finally reality sets in that there is value in the technology, but it’s not going to “save the world”.

As a matter of reference, we are deeply involved in using Transfer Learning, Tiny ML, Synthetic Data, Deep Learning, Data Labeling, Event Stream Processing, and Computer Vision.

February 18, 2023

It’s important to understand that these interactive chat tools (Microsoft’s Sydney, Google’s Bard, or OpenAI’s ChatGPT) appear to have the conversational traits of a live human. Overlooking for the moment some of the highly publicized failures of these systems, it is important to recognize what these chatbots really do in answering questions, offering recommendations, etc.

In reality, the chat tools are systems that leverage what computers do best — recalling and processing petabytes of information (“Big Data”) such as all the details stored in Wikipedia. These capabilities are deployed to provide the appearance of a knowledgeable human, but these systems based on “Large Language Models” do not have cognitive capabilities (e.g., understanding cause and effect relationships).

In addition, since these tools are so dependent on public web sites for their “training” data, these chat systems reflect the biases and politically tainted information of those sites (e.g., Wikipedia has become notorious for its lack of credibility on many topics). As a result, it’s important to not overlook the essential observation: “Most computer models solidify and provide false support for the understandings, misunderstandings, and limitations of the modelers and the input data.” Thus, chatbots do not possess AGI and they are not the Oracle of Delphi.

This assessment doesn’t mean to imply that these chat systems are useless. It’s just important to understand their real intelligence capabilities and inherent limitations.

January 21, 2023

To distinguish the subtleties of computer-based intelligence, it appears that the new acronym is now “AGI” – Artificial General Intelligence. AGI implies a machine capable of understanding the world as well as any human, and with the same capacity to learn and carry out a wide range of tasks… That’s a reasonable definition. Obviously, this posting recognizes that this is an important distinction.

In the meantime, we’ve seen the release of ChatGPT and all the excitement about its seemingly intelligent ability to behave like a human. Unfortunately, while it may be robust enough to easily pass the Turing Test and generate news commentary, it is nothing close to AGI.

As prognosticator Rodney Brooks has noted about ChatGPT:

“People are making the same mistake that they have made again and again and again, completely misjudging some new AI demo as the sign that everything in the world has changed. It hasn’t.”

January 7, 2023

The American Medical Association (AMA) is now touting that AI should be called ‘Augmented Intelligence’. My assumption is that this positioning is a result of the disappointments from IBM Watson Healthcare AI and the many other far-reaching promises that resulted in failures. The AMA now claims:

AI is currently practiced by about one in five physicians. Additionally, three out of five are excited about the adoption of this technology in the future…

artificial intelligence (AI) could truly support doctors and make them “less fallible.” This could serve as most value during times of stress and weariness, which are so frequent in the medical field.

December 12, 2022

The notion of 100% automation, 100% of the time is very daunting. Even though nature and mathematics have taught us about asymptotic limits and the law of diminishing returns, people are still in pursuit of this nirvana.

There are two technology-related developments occurring right now that will have significant implications on the latest public perceptions of AI. One feature that these two have in common is that neither of these technologies is ready to support 100%-100%.

1) The promise of driverless vehicles is running into reality, whereby the quantity and variety of edge cases encountered by a driver really does require the abilities of “Artificial General Intelligence”. This has most famously been manifested by some of the accidents with vehicles using Tesla autopilot. The automobile companies and investors are slowly backing away from self-driving in much the same way that IBM backed away from Watson AI for 100% automated healthcare.

2) The “generative” AI systems that create stories and images that appear to be human-created (e.g., ChatGPT, Dall-E, etc.) are going to be good enough to “throw a monkey in the wrench” for the already chaotic social networks. It’s going to be similar to the introduction of email in marketing: the “news and information” generated by these systems isn’t going to be high-quality, but it will be so low cost that there is going to be a flood of disinformation.

One of the most pointed comments about ChatGPT came from a literary editor at The Spectator who wrote:

What OpenAI has produced seems to me to be a marvelously clever toy, rather than a quantum leap, or a leap of any sort, in the direction of Artificial General Intelligence. It’s a very sophisticated Magic 8 Ball. Tech hype is always like this.

October 25, 2022

While I’m not a fan of Scientific American, it does have an interesting article about How AI in medicine is overhyped:

Published reports of this [AI] technology currently paint a too-optimistic picture of its accuracy, which at times translates to sensationalized stories in the press…

As researchers feed data into AI models, the models are expected to become more accurate, or at least not get worse. However, our work and the work of others has identified the opposite, where the reported accuracy in published models decreases with increasing data set size….

Unfortunately, there is no silver bullet for reliably validating clinical AI models. Every tool and every clinical population are different.

September 20, 2022

Some solid observations about AI from Gary Marcus, where he contemplates that Google and Tesla have been the principal sources promoting unrealistic expectations about AI:

…they do want to believe that a new kind of “artificial general intelligence [AGI]”, capable of doing math, understanding human language, and so much more, is here or nearly here. Elon Musk, for example, recently said that it was more likely than not that we would see AGI by 2029…

In January of 2018, Google’s CEO Sundar Pichai told Kara Swisher, in a big publicized interview, that “AI is one of the most important things humanity is working on. It is more profound than, I dunno, electricity or fire”…

If another AI winter does come, it will not be because AI is impossible, but because AI hype exceeds reality. The only cure for that is truth in advertising.

July 31, 2022

I previously mentioned this book by author Erik Larson, “The Myth of Artificial Intelligence: Why Computers Can’t Think the Way We Do“, which provides very careful explanations of the many challenges. One reviewer has commented: “Artificial intelligence has always inspired outlandish visions—that AI is going to destroy us, save us, or at the very least radically transform us. Erik Larson exposes the vast gap between the actual science underlying AI and the dramatic claims being made for it. This is a timely, important, and even essential book.” Thus, you don’t have to depend upon my review that Larson’s book is a very good tome.

The first important observation is recognizing the inherent complexity of the cognitive processes involved with human intelligence. Larson comments:

A precondition for building a smarter brain is to first understand how the ones we have are cognitive, in the sense that we can imagine scenarios, entertain thoughts and their connections, find solutions, and discover new problems.

And his related observation:

For AI researchers to lack a theory of inference is like nuclear engineers beginning work on the nuclear bomb without first working out the details of fission reactions.

Another great story about his experiences with the overpromise of AI:

As philosopher and cognitive scientist Jerry Fodor put it, AI researchers had walked into a game of three-dimensional chess thinking it was tic-tac-toe.

May 14, 2022

In a book released by Erik J. Larson in early 2022 (“The Myth of Artificial Intelligence”), he makes strong statements about the current reality of artificial intelligence:

The science of AI has uncovered a very large mystery at the heart of intelligence, which no one currently has a clue how to solve. Proponents of AI have huge incentives to minimize its known limitations. After all, AI is big business, and it’s increasingly dominant in culture. Yet the possibilities for future AI systems are limited by what we currently know about the nature of intelligence…

We are not on the path to developing truly intelligent machines. We don’t even know where that path might be.

May 10, 2022

Some interesting comments about AI from the CEO of IBM:

IBM chairman and CEO Arvind Krishna said IBM’s focus will be firmly on AI projects that provide near-term value for clients, adding that he believes generalized artificial intelligence is still a long time away… “Some people believe it could be as early as 2030, but the vast majority put it out in the 2050 to 2075 range”.

April 28, 2022

It’s generally difficult to emulate and repair a functioning system if you don’t know how that system works. Thus, I continue to insist that a much better understanding of how the human brain works will be one of the greatest breakthroughs in the next 20 years.

There was a recent article about a scientist at Carnegie Mellon & the University of Pittsburgh in which he made similar remarks with respect to computer vision:

Neuromorphic technologies are those inspired by biological systems, including the ultimate computer, the brain and its compute elements, the neurons. The problem is that no one fully understands exactly how neurons work…

“The problem is to make an effective product, you cannot [imitate] all the complexity because we don’t understand it,” he said. “If we had good brain theory, we would solve it — the problem is we just don’t know [enough].”

April 3, 2022

A very pertinent new book from computer engineer Jeff Hawkins, entitled “A Thousand Brains – A New Theory of Intelligence”:

We know that the brain combines sensory input from all over your body into a single perception, but not how. We think brains “compute” in some sense, but we can’t say what those computations are. We believe that the brain is organized as a hierarchy, with different pieces all working collaboratively to make a single model of the world. But we can explain neither how those pieces are differentiated, nor how they collaborate.

…

Hawkins’ proposal, called the Thousand Brains Theory of Intelligence, is that your brain is organized into thousands upon thousands of individually computing units, called cortical columns. These columns all process information from the outside world in the same way and each builds a complete model of the world. But because every column has different connections to the rest of the body, each has a unique frame of reference. Your brain sorts out all those models by conducting a vote.

January 21, 2022

Well, we now have the Wall Street Journal coming out and telling us why AI is not really “intelligent”:

“In a certain sense I think that artificial intelligence is a bad name for what it is we’re doing here,” says Kevin Scott, chief technology officer of Microsoft. “As soon as you utter the words ‘artificial intelligence’ to an intelligent human being, they start making associations about their own intelligence, about what’s easy and hard for them, and they superimpose those expectations onto these software systems.”

Who would have thought?

December 30, 2021

A professor of cognitive and neural systems at Boston University, Stephen Grossberg, argues that an entirely different approach to artificial/augmented intelligence is needed. He has defined an alternative model for artificial intelligence based on cognitive and neural research he has been conducting during his long career.

He has a new book that attempts to explain thoughts, feelings, hopes, sensations, and plans. He defines biological models that attempt to explain how that happens.

The problem with today’s AI, he says, is that it tries to imitate the results of brain processing instead of probing the mechanisms that give rise to the results. People’s behaviors adapt to new situations and sensations “on the fly,” Grossberg says, thanks to specialized circuits in the brain. People can learn from new situations, he adds, and unexpected events are integrated into their collected knowledge and expectations about the world.

December 18, 2021

A recent issue of IEEE Spectrum contains a discussion about cognitive science, neuroscience, and artificial intelligence from Steven Pinker.

“Cognitive science itself became overshadowed by neuroscience in the 1990s and artificial intelligence in this decade, but I think those fields will need to overcome their theoretical barrenness and be reintegrated with the study of cognition — mindless neurophysiology and machine learning have each hit walls when it comes to illuminating intelligence.”

December 16, 2021

Here is something to indicate the state of investment in AI:

Enterprise AI vendor C3 AI announced the receipt of a $500 million AI modeling and simulation software agreement with the U.S. Department of Defense (DOD) that aims to help the military accelerate its use of AI in the nation’s defense.

For C3 AI, the five-year deal could not have come at a better time as the company has watched its stock price decline by almost 80 percent over the last 52 weeks.

…

The deal with the DOD “allows for an accelerated timeline to acquire C3 AI’s suite of Enterprise AI products and allows any DOD agency to acquire C3 AI products and services for modeling and simulation,” the company said in a statement.

September 29, 2021

These so-called AI programs that these companies have created are just not yet comparable to human intelligence. I’m not telling you that these algorithms of augmented intelligence are worthless. Indeed, in the proper application, deep learning can be very valuable. In fact, we use them in the software tools that we create at one of my companies. These advanced neural nets are much more robust and provide higher performance in computer vision applications. In most cases, it is using brute force computing power to execute what I refer to as “closeness” measurements.

We just should not continue to sully the reputation of AI by setting expectations that cannot currently be achieved. Here is another recent article in IEEE Spectrum that takes a similar position:

Regardless of what you might think about AI, the reality is that just about every successful deployment has either one of two expedients: It has a person somewhere in the loop, or the cost of failure, should the system blunder, is very low.

July 17, 2021

After the IBM “AI” system, known as Watson, bested many humans on the gameshow Jeopardy ten years ago, many of the managers at IBM thought they had a magical box filled with superhuman intelligence. They violated what I call the “11th Commandment”… From a recent article in the New York Times:

Watson has not remade any industries… The company’s missteps with Watson began with its early emphasis on big and difficult initiatives intended to generate both acclaim and sizable revenue for the company… Product people, they say, might have better understood that Watson had been custom-built for a quiz show, a powerful but limited technology… Watson stands out as a sobering example of the pitfalls of technological hype and hubris around A.I. The march of artificial intelligence through the mainstream economy, it turns out, will be more step-by-step evolution than cataclysmic revolution.

You may ask, what is the 11th Commandment? It’s simple but often overlooked by technoids with big egos: “Thou shall not bullshit thy selves…”

June 7, 2021

Here is someone else catching up with these unrealistic expectations for AI… A researcher for Microsoft has exclaimed in an interview that “AI is neither artificial nor intelligent”.

At the same time, I discovered this article in Quartz talking about the application of real-life AI in a very narrow domain. In fact, it’s an area that should be very primed for the use of computer vision and object detection. In this case, it’s the review of radiological data such as x-rays.

The inert AI revolution in radiology is yet another example of how AI has overpromised and under delivered. In books, television shows, and movies, computers are like humans, but much smarter and less emotional… computer algorithms do not have sentiment, feelings, or passions. They also do not have wisdom, common sense, or critical thinking skills. They are extraordinarily good at mathematical calculations, but they are not intelligent in any meaningful sense of the word.

I don’t mean to be such an AI naysayer, but augmented intelligence is a much more realistic assessment, and — when set with practical expectations — it does have the ability to provide business and societal value.

April 25, 2021

This article about data quality is another example of how current AI is less concerned with “intelligence”, and more focused on using digital computing for algorithms and processing large amounts of data:

Poor data quality is hurting artificial intelligence (AI) and machine learning (ML) initiatives. This problem affects companies of every size from small businesses and startups to giants like Google. Unpacking data quality issues often reveals a very human cause…

April 2, 2021

Here is an article in IEEE Spectrum magazine (web site) that supports the position of this posting by exclaiming “Stop Calling Everything AI”:

Artificial-intelligence systems are nowhere near advanced enough to replace humans in many tasks involving reasoning, real-world knowledge, and social interaction. They are showing human-level competence in low-level pattern recognition skills, but at the cognitive level they are merely imitating human intelligence… Computers have not become intelligent per se, but they have provided capabilities that augment human intelligence…

Note: IEEE (Institute of Electrical and Electronics Engineers) Spectrum is still one of the better engineering journals where the articles are not all written by “journalists” that are prostituting the engineering profession to promote a political position.

March 6, 2021

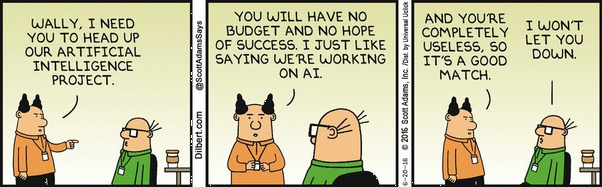

You know that a practical view of problem solving is getting out of hand when one of your software developers indicates that he’s going to fix the problem by “throwing some AI at it”. Here is a related cartoon strip:

January 3, 2021

In a recent article about the inappropriateness of the Turing Test, the lead scientist for Amazon’s Alexa talks about the proper focus of AI efforts:

“Instead of obsessing about making AIs indistinguishable from humans, our ambition should be building AIs that augment human intelligence and improve our daily lives…”

June 2, 2020

Here is another article, this time in TechSpot, that outlines some of the presumptuous and misleading claims for artificial intelligence:

In a separate study from last year that analyzed neural network recommendation systems used by media streaming services, researchers found that six out of seven failed to outperform simple, non-neural algorithms developed years earlier.

April 16, 2020

A nice article in the ACM Digit magazine provides a concise history of artificial intelligence. One of the best contributions is this great summary timeline.

January 4, 2020

A recent article in Kaiser Health News about the overblown claims of AI in healthcare:

Early experiments in AI provide a reason for caution, said Mildred Cho, a professor of pediatrics at Stanford’s Center for Biomedical Ethics… In one case, AI software incorrectly concluded that people with pneumonia were less likely to die if they had asthma ― an error that could have led doctors to deprive asthma patients of the extra care they need….

Medical AI, which pulled in $1.6 billion in venture capital funding in the third quarter alone, is “nearly at the peak of inflated expectations,” concluded a July report from the research company Gartner. “As the reality gets tested, there will likely be a rough slide into the trough of disillusionment.”

It’s important to remember that what many people are claiming as ‘artificial intelligence’ is hardly that… In reality, it’s predominantly algorithms leveraging large amounts of data. As I’ve previously mentioned, I feel that I’m reliving the ballyhooed AI claims of the 1980s all over again.

June 29, 2019

Well, here is Joe from Forbes trying to steal my thunder in using ‘Augmented’ instead of ‘Artificial’:

Perhaps “artificial” is too artificial of a word for the AI equation. Augmented intelligence describes the essence of the technology in a more elegant and accurate way.

Not much else of interest in the article…

April 16, 2019

Here is another example of the underwhelming performance of technology mistakenly referred to as “artificial intelligence”. In this case, Google’s DeepMind tool was used to take a high school math test:

The algorithm was trained on the sorts of algebra, calculus, and other types of math questions that would appear on a 16-year-old’s math exam… But artificial intelligence is quite literally built to pore over data, scanning for patterns and analyzing them…. In that regard, the results of the test — on which the algorithm scored a 14 out of 40 — aren’t reassuring.

April 3, 2019

This is a very good article in the reputable IEEE Spectrum. It explains some of the massive over-promising and under-delivering associated with the IBM Watson initiatives in the medical industry (note: I am an IBM stockholder):

Experts in computer science and medicine alike agree that AI has the potential to transform the health care industry. Yet so far, that potential has primarily been demonstrated in carefully controlled experiments… Today, IBM’s leaders talk about the Watson Health effort as “a journey” down a road with many twists and turns. “It’s a difficult task to inject AI into health care, and it’s a challenge. But we’re doing it.”

AI systems can’t understand ambiguity and don’t pick up on subtle clues that a human doctor would notice… no AI built so far can match a human doctor’s comprehension and insight… a fundamental mismatch between the promise of machine learning and the reality of medical care—between “real AI” and the requirements of a functional product for today’s doctors.

It’s been 50 years since the folks at Stanford first created the Mycin ‘expert system’ for identifying infections, and there is still a long way to go. That is one reason that I continue to refer to AI as “augmented intelligence”.

February 18, 2019

A recent article in Electrical Engineering Times proclaims that the latest incarnation of AI represents a lot of “pretending”:

In fact, modern AI (i.e. Siri, IBM’s Watson, etc.) is not capable of “reading” (a sentence, situation, an expression) or “understanding” same. AI, however, is great at pretending as though it understands what someone just asked, by doing a lot of “searching” and “optimizing.”…

You might have heard of Japan’s Fifth Generation Computer System, a massive government-industry collaboration launched in 1982. The goal was a computer “using massively parallel computing and processing” to provide a platform for future developments in “artificial intelligence.” Reading through what was stated then, I know I’m not the only one feeling a twinge of “Déjà vu.”…

Big companies like IBM and Google “quickly abandoned the idea of developing AI around logic programming. They shifted their efforts to developing a statistical method in designing AI for Google translation, or IBM’s Watson,” she explained in her book. Modern AI thrives on the power of statistics and probability.

February 16, 2019

I’ve had lengthy discussions with a famous neurologist/computer scientist about the man-made creation of synthetic intelligence, which I contend is constrained by the lack of a normative model of the brain (i.e., an understanding at the biochemical level of brain processes such as the formation of memories). I’ve been telling him for the last 10 years that our current knowledge of the brain is similar to the medical knowledge reflected in the 17th-century Rembrandt painting that depicts early medical practitioners performing a dissection of the human body (and likely remarking “hey, what’s that?”).

Well, I finally found a neuroscientist at Johns Hopkins who argues that science needs the equivalent of the periodic table of elements to provide a framework for brain functions:

Gül Dölen, assistant professor of neuroscience at the Brain Science Institute at Johns Hopkins, thinks that neuroscientists might need to take a step back in order to better understand this organ… Slicing a few brains apart or taking a few MRIs won’t be enough… neuroscientists can’t even agree on the brain’s most basic information-carrying unit…

The impact could be extraordinary, from revolutionizing AI to curing brain diseases.

It’s important to keep in mind that future synthetic intelligence systems do not have to precisely emulate the normative intelligence model of the human brain. As an example, early flight pioneers from Da Vinci to Langley often thought that human flight required the emulation of the bird with the flapping of wings. Many of those ultimately responsible for successful flight instead focused on the principles of physics (e.g., pressure differentials) to enable flight. As we clearly understand now, airplanes and rockets don’t flap their wings.

November 14, 2018

It’s similar to the old retort for those who can’t believe the truth: don’t ask me about my opinion on the latest rendition of AI, just ask this executive at Google:

“AI is currently very, very stupid,” said Andrew Moore, a Google vice president. “It is really good at doing certain things which our brains can’t handle, but it’s not something we could press to do general-purpose reasoning involving things like analogies or creative thinking or jumping outside the box.”

July 28, 2018

Here are some words from a venture capital investor who has had similar experiences to my own when it comes to (currently) unrealistic expectations from artificial intelligence (AI):

Last year AI companies attracted more than $10.8 billion in funding from venture capitalists like me. AI has the ability to enable smarter decision-making. It allows entrepreneurs and innovators to create products of great value to the customer. So why don’t I focus on investing in AI?

During the AI boom of the 1980s, the field also enjoyed a great deal of hype and rapid investment. Rather than considering the value of individual startups’ ideas, investors were looking for interesting technologies to fund. This is why most of the first generation of AI companies have already disappeared. Companies like Symbolics, Intellicorp, and Gensym — AI companies founded in the ’80s — have all transformed or gone defunct.

And here we are again, nearly 40 years later, facing the same issues.

Though the technology is more sophisticated today, one fundamental truth remains: AI does not intrinsically create consumer value. This is why I don’t invest in AI or “deep tech.” Instead, I invest in deep value.

April 22, 2018

It’s interesting that so many famous prognosticators, such as Hawking, Musk, et al., are acting like the Luddites of the 19th century. That is, they make dire predictions that new technology is heralding the end of the world. Elon Musk has gone on record stating that artificial intelligence will bring human extinction.

Fortunately, there are more pragmatic scientists, such as Nathan Myhrvold, who understand the real nature of technology adoption. He uses the history of mathematics to articulate a pertinent analogy as well as justify his skepticism.

This situation is a classic example of something that the innovation doomsayers routinely forget: in almost all areas where we have deployed computers, the more capable the computers have become, the wider the range of uses we have found for them. It takes a lot of human effort and jobs to satisfy that rising demand.

March 21, 2018

One of the reasons that I still have not bought into true synthetic/artificial intelligence is the fact that we still lack a normative model that explains the operation of the human brain. In contrast, many of the other physiological systems can be analogized by engineering systems: the cardiovascular system is a hydraulic pumping system; the excretory system is a fluid filtering system; the skeletal system is a structural support system; and so on.

One of my regular tennis partners is an anesthesiologist who has shared with me that the medical practitioners don’t really know what causes changes in consciousness. This implies that anesthesia is still based on ‘Edisonian’ science (i.e., based predominantly on trial and error without the benefit of understanding the deterministic cause & effects). This highlights the fact that the model for what constitutes brain states and functions is still incomplete. Thus, it’s difficult to create an ‘artificial’ version of that extremely complex neurological system.

March 17, 2018

A great summary description of the current situation from Vivek Wadhwa:

Artificial intelligence is like teenage sex: “Everyone talks about it, nobody really knows how to do it, everyone thinks everyone else is doing it, so everyone claims they are doing it.” Even though AI systems can now learn a game and beat champions within hours, they are hard to apply to business applications.

March 6, 2018

It’s interesting how this recent article about AI starts: “I took an Uber to an artificial intelligence conference…” In my case, it was late 1988 and I took a taxi to an artificial intelligence conference in Philadelphia. AI then was filled with all these fanciful promises, and frankly the attendance at the conference felt like a feeding frenzy. It reminded me of the Jerry Lewis movie “Who’s Minding the Store” with all of the attendees pushing each other to get inside the convention hall.

Of course, AI didn’t take over the world then and I don’t expect it to now. However, with advances in technology over the last 30 years, I do see the adoption of a different AI, ‘augmented intelligence’, becoming more of a mainstay. One reason (which is typically associated with the ‘internet of things’) is that sensors now cost next to nothing and are much more effective at tasks such as recognizing voice commands, recognizing images and shapes, and determining current locations. This provides much more human-like sensing that augments people in ways we have not yet totally imagined (e.g., food and voice recognition to support blind people).

On the flip side, there are many AI-related technologies that are really based more on the availability of large amounts of data and raw computing power. These are often referred to with esoteric names such as neural networks and machine learning. While these do not truly represent synthetic intelligence, they have the basis for making vast improvements in analyses. For example, we’re working with a company to accumulate data on all the details of everything that they make to enable them to rapidly understand the true drivers of what makes a good part versus a bad part. This is enabled by the combination of the sensors described above along with the advanced computing techniques.

The marketing people in industry have adopted the use of the phrase ‘digital transformation’ to describe the opportunities and necessities that exist with the latest technology. For me, I just view it as the latest generation of computer hardware and software that is enabling another great wave. If futurist Alvin Toffler were alive today, he’d likely be calling it the ‘fourth wave’.
